Difference between revisions of "RockpiN10/hardware/npu"


Latest revision as of 02:38, 18 March 2020


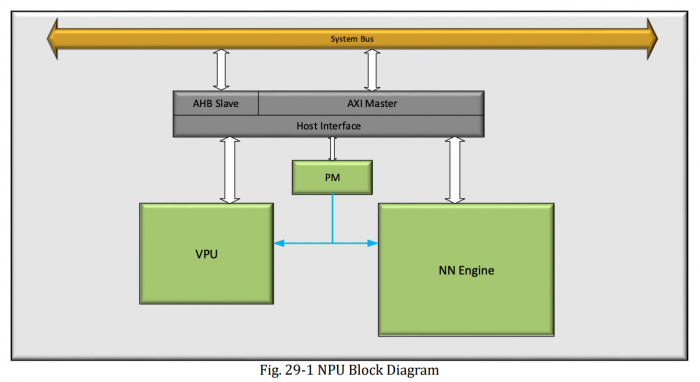

The NPU (neural processing unit) is a processing unit dedicated to neural networks. It is designed to accelerate neural network arithmetic in AI (artificial intelligence) fields such as machine vision and natural language processing. The range of AI applications is expanding and currently includes face tracking, gesture and body tracking, image classification, video surveillance, automatic speech recognition (ASR), and advanced driver assistance systems (ADAS).

The NPU in the RK3399Pro supports the following features:

Host interface

- 32-bit AHB interface used for configuration only support single

- 128-bit AXI interface used to fetch data from memory

Neural Network

- Support int8, int16 and fp16 convolution operations

- 1920 MAD (multiply-add units) per cycle (int 8)

- 192 MAD per cycle (int 16)

- 64 MAD per cycle (float 16)

- Support Linear, MIMO, Fully Connected, Fully Convolutional

- Unlimited network size (bound by system resource)

- Inference Engine: TensorFlow backend, OpenCL, OpenVX, Android NN backend

- Support network sparse coefficient decompression

- Support Max, average pooling

- Max pooling support 2x2, 3x3, stride <= min(input width, input height)

- Local average pooling size <= 11x11

- Support unpooling

- Support batch normalize, l2 normalize, l2 normalize scale, local response normalize

- Support region proposal

- Support permute, reshape, concat, depth to space, space to depth, flatten, reorg, squeeze and split

- Support priorbox layer

- Support Non-max Suppression

- Support ROI pooling

- Convolution size NxN, N <= 11*stride, stride <= min(input width, input height)

- Support dilated convolution, N <= 11*stride, stride <= min(input width, input height), dilation < 1024

- Support deconvolution, N <= 11*stride, stride <= min(input width, input height)

- Support Elementwise addition, div, floor, max, mul, scale, sub

- Support elu, leaky_relu, prelu, relu, relu1, relu6, sigmoid, softmax, tanh

- Support LSTM, RNN

- Support channel shuffle

- Support dequantize, dropout.

- Include embedded lookup table

- Support hashtable lookup

- Support lsh projection

- Support svdf

- Support reverse
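
The size limits in the list above for convolution and pooling can be collected into a small pre-flight check. The following is a minimal sketch in Python and is not part of any Rockchip tool: the function and parameter names are hypothetical, the limits are transcribed literally from the list, and the requirement that the stride be at least 1 is an added assumption.

```python
# Hypothetical helpers; limits are copied from the feature list above.

def _check_stride(stride, in_w, in_h):
    # Assumption: a stride below 1 is never meaningful.
    if stride < 1 or stride > min(in_w, in_h):
        return ["stride must be in 1..min(input width, input height)"]
    return []


def check_conv(kernel, stride, in_w, in_h, dilation=1):
    """Return the violated limits for an NxN convolution (empty list if none).

    The same limits are listed for dilated convolution and deconvolution;
    the dilation bound only matters for dilated convolution.
    """
    problems = _check_stride(stride, in_w, in_h)
    if kernel > 11 * stride:
        problems.append("kernel size N must be <= 11 * stride")
    if dilation >= 1024:
        problems.append("dilation must be < 1024")
    return problems


def check_max_pool(size, stride, in_w, in_h):
    """Return the violated limits for a max pooling layer."""
    problems = _check_stride(stride, in_w, in_h)
    if size not in (2, 3):
        problems.append("max pooling supports only 2x2 and 3x3 windows")
    return problems


def check_avg_pool(size):
    """Return the violated limits for a local average pooling layer."""
    if size > 11:
        return ["local average pooling size must be <= 11x11"]
    return []


if __name__ == "__main__":
    # A 13x13 kernel passes with stride 2 (13 <= 22) but not with stride 1.
    print(check_conv(13, 2, 224, 224))
    print(check_conv(13, 1, 224, 224))
```

A layer that returns a non-empty list here falls outside the limits stated on this page; whether a given toolchain rejects it or handles it some other way is not covered by this sketch.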

Neural Network Engine

As its name suggests, the NN Engine is the main processing unit for neural network arithmetic. It provides parallel convolution MAC units for recognition functions and supports int8, int16 and fp16. Activation functions such as leaky_relu, relu, relu1, relu6, sigmoid and tanh, as well as pooling, are also processed in the NN Engine. The NN Engine therefore mainly serves convolutional neural networks and fully connected networks.
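
The per-cycle MAD figures in the feature list (1920 for int8, 192 for int16, 64 for fp16) translate into peak arithmetic throughput once a clock frequency is fixed. The sketch below assumes each MAD counts as two operations (one multiply and one add) and takes the clock as a parameter, because this page does not state the NPU clock. The 800 MHz value in the usage loop is purely illustrative and is not a specification; `peak_ops_per_second` is a hypothetical helper name.

```python
# MAD units per cycle, taken from the feature list on this page.
MADS_PER_CYCLE = {"int8": 1920, "int16": 192, "fp16": 64}


def peak_ops_per_second(dtype, clock_hz, ops_per_mad=2):
    """Theoretical peak operations per second for one data type.

    Assumption: one MAD is counted as `ops_per_mad` operations
    (a multiply plus an add). Real workloads will not reach this peak.
    """
    return MADS_PER_CYCLE[dtype] * ops_per_mad * clock_hz


if __name__ == "__main__":
    illustrative_clock_hz = 800_000_000  # assumed value, not from this page
    for dtype in MADS_PER_CYCLE:
        tops = peak_ops_per_second(dtype, illustrative_clock_hz) / 1e12
        print(f"{dtype}: {tops:.3f} TOPS (peak, at the assumed clock)")
```

The ratio between data types does not depend on the clock: at any frequency, int8 peak throughput is ten times that of int16 and thirty times that of fp16.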

Vector Processing Unit

The Vector Processing Unit (VPU) supplements the NN Engine. It includes a programmable SIMD processor that acts as a compute unit for OpenCL. The VPU provides advanced image processing functions; for example, in one cycle it can perform one MUL/ADD instruction or a dot product of two 16-component values. Most element-wise operations and matrix operations are processed in the VPU.
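
To illustrate what a dot product of two 16-component values means for longer vectors, the following sketch models the operation in plain Python. It is a software model only and does not reflect the actual VPU instruction set: `LANES`, `dot16` and `chunked_dot` are hypothetical names, and the returned step count is simply the number of 16-wide dot products issued, not a measured cycle count.

```python
LANES = 16  # width of one dot-product operation, per the text above


def dot16(a, b):
    """Dot product of exactly two 16-component vectors."""
    if len(a) != LANES or len(b) != LANES:
        raise ValueError("dot16 expects two 16-component vectors")
    return sum(x * y for x, y in zip(a, b))


def chunked_dot(a, b):
    """Dot product of equal-length vectors, split into 16-wide pieces.

    The last piece is zero-padded, which leaves the result unchanged.
    Returns (result, number_of_16_wide_steps).
    """
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    total = 0
    steps = 0
    for start in range(0, len(a), LANES):
        chunk_a = list(a[start:start + LANES])
        chunk_b = list(b[start:start + LANES])
        padding = [0] * (LANES - len(chunk_a))
        total += dot16(chunk_a + padding, chunk_b + padding)
        steps += 1
    return total, steps


if __name__ == "__main__":
    # A 40-element vector needs three 16-wide steps (16 + 16 + 8 padded).
    print(chunked_dot(list(range(40)), [1] * 40))
```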